Tutorial: Hypothesis Verification for 3D Object Recognition

This tutorial explains how to recognize 3D objects in cluttered and heavily occluded scenes by verifying model hypotheses. After descriptor matching, it runs one of the Correspondence Grouping algorithms available in PCL to cluster the set of point-to-point correspondences and determine instances of object hypotheses in the scene. The Global Hypothesis Verification algorithm is then applied to these hypotheses to reduce the number of false positives.

Suggested readings and prerequisites

This tutorial is the follow-up to a previous tutorial on object recognition: 3D Object Recognition based on Correspondence Grouping. We suggest reading and understanding that tutorial first.

More details on the Global Hypothesis Verification method can be found here: A. Aldoma, F. Tombari, L. Di Stefano, M. Vincze, A global hypothesis verification method for 3D object recognition, ECCV 2012

For more information on 3D Object Recognition in Clutter and on the standard feature-based recognition pipeline, we suggest this tutorial paper: A. Aldoma, Z.C. Marton, F. Tombari, W. Wohlkinger, C. Potthast, B. Zeisl, R.B. Rusu, S. Gedikli, M. Vincze, “Point Cloud Library: Three-Dimensional Object Recognition and 6 DOF Pose Estimation”, IEEE Robotics and Automation Magazine, 2012

The Code

Before starting, download the example PCD clouds used in this tutorial (milk.pcd and milk_cartoon_all_small_clorox.pcd) from the Correspondence Grouping folder on GitHub, and place the files in the source folder.

Then copy and paste the following code into your editor and save it as global_hypothesis_verification.cpp.

/*

* Software License Agreement (BSD License)

*

* Point Cloud Library (PCL) - www.pointclouds.org

* Copyright (c) 2014-, Open Perception, Inc.

*

* All rights reserved.

*

* Redistribution and use in source and binary forms, with or without

* modification, are permitted provided that the following conditions

* are met:

*

* * Redistributions of source code must retain the above copyright

* notice, this list of conditions and the following disclaimer.

* * Redistributions in binary form must reproduce the above

* copyright notice, this list of conditions and the following

* disclaimer in the documentation and/or other materials provided

* with the distribution.

* * Neither the name of the copyright holder(s) nor the names of its

* contributors may be used to endorse or promote products derived

* from this software without specific prior written permission.

*

* THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

* "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

* LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS

* FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE

* COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT,

* INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING,

* BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES;

* LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

* CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT

* LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN

* ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

* POSSIBILITY OF SUCH DAMAGE.

*

*/

#include <pcl/io/pcd_io.h>

#include <pcl/point_cloud.h>

#include <pcl/correspondence.h>

#include <pcl/features/normal_3d_omp.h>

#include <pcl/features/shot_omp.h>

#include <pcl/features/board.h>

#include <pcl/filters/uniform_sampling.h>

#include <pcl/recognition/cg/hough_3d.h>

#include <pcl/recognition/cg/geometric_consistency.h>

#include <pcl/recognition/hv/hv_go.h>

#include <pcl/registration/icp.h>

#include <pcl/visualization/pcl_visualizer.h>

#include <pcl/kdtree/kdtree_flann.h>

#include <pcl/kdtree/impl/kdtree_flann.hpp>

#include <pcl/common/transforms.h>

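#include <pcl/console/parse.h>

#include <cmath>

#include <cstdlib>

#include <iostream>

#include <sstream>

#include <string>

#include <vector>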

typedef pcl::PointXYZRGBA PointType;

typedef pcl::Normal NormalType;

typedef pcl::ReferenceFrame RFType;

typedef pcl::SHOT352 DescriptorType;

struct CloudStyle

{

double r;

double g;

double b;

double size;

CloudStyle (double r,

double g,

double b,

double size) :

r (r),

g (g),

b (b),

size (size)

{

}

};

CloudStyle style_white (255.0, 255.0, 255.0, 4.0);

CloudStyle style_red (255.0, 0.0, 0.0, 3.0);

CloudStyle style_green (0.0, 255.0, 0.0, 5.0);

CloudStyle style_cyan (93.0, 200.0, 217.0, 4.0);

CloudStyle style_violet (255.0, 0.0, 255.0, 8.0);

std::string model_filename_;

std::string scene_filename_;

//Algorithm params

bool show_keypoints_ (false);

bool use_hough_ (true);

float model_ss_ (0.02f);

float scene_ss_ (0.02f);

float rf_rad_ (0.015f);

float descr_rad_ (0.02f);

float cg_size_ (0.01f);

float cg_thresh_ (5.0f);

int icp_max_iter_ (5);

float icp_corr_distance_ (0.005f);

float hv_resolution_ (0.005f);

float hv_occupancy_grid_resolution_ (0.01f);

float hv_clutter_reg_ (5.0f);

float hv_inlier_th_ (0.005f);

float hv_occlusion_th_ (0.01f);

float hv_rad_clutter_ (0.03f);

float hv_regularizer_ (3.0f);

float hv_rad_normals_ (0.05f);

bool hv_detect_clutter_ (true);

/**

* Prints out Help message

* @param filename Runnable App Name

*/

void

showHelp (char *filename)

{

std::cout << std::endl;

std::cout << "***************************************************************************" << std::endl;

std::cout << "* *" << std::endl;

std::cout << "* Global Hypotheses Verification Tutorial - Usage Guide *" << std::endl;

std::cout << "* *" << std::endl;

std::cout << "***************************************************************************" << std::endl << std::endl;

std::cout << "Usage: " << filename << " model_filename.pcd scene_filename.pcd [Options]" << std::endl << std::endl;

std::cout << "Options:" << std::endl;

std::cout << " -h: Show this help." << std::endl;

std::cout << " -k: Show keypoints." << std::endl;

std::cout << " --algorithm (Hough|GC): Clustering algorithm used (default Hough)." << std::endl;

std::cout << " --model_ss val: Model uniform sampling radius (default " << model_ss_ << ")" << std::endl;

std::cout << " --scene_ss val: Scene uniform sampling radius (default " << scene_ss_ << ")" << std::endl;

std::cout << " --rf_rad val: Reference frame radius (default " << rf_rad_ << ")" << std::endl;

std::cout << " --descr_rad val: Descriptor radius (default " << descr_rad_ << ")" << std::endl;

std::cout << " --cg_size val: Cluster size (default " << cg_size_ << ")" << std::endl;

std::cout << " --cg_thresh val: Clustering threshold (default " << cg_thresh_ << ")" << std::endl << std::endl;

std::cout << " --icp_max_iter val: ICP max iterations number (default " << icp_max_iter_ << ")" << std::endl;

std::cout << " --icp_corr_distance val: ICP correspondence distance (default " << icp_corr_distance_ << ")" << std::endl << std::endl;

std::cout << " --hv_clutter_reg val: Clutter Regularizer (default " << hv_clutter_reg_ << ")" << std::endl;

std::cout << " --hv_inlier_th val: Inlier threshold (default " << hv_inlier_th_ << ")" << std::endl;

std::cout << " --hv_occlusion_th val: Occlusion threshold (default " << hv_occlusion_th_ << ")" << std::endl;

std::cout << " --hv_rad_clutter val: Clutter radius (default " << hv_rad_clutter_ << ")" << std::endl;

std::cout << " --hv_regularizer val: Regularizer value (default " << hv_regularizer_ << ")" << std::endl;

std::cout << " --hv_rad_normals val: Normals radius (default " << hv_rad_normals_ << ")" << std::endl;

std::cout << " --hv_detect_clutter val: TRUE if clutter detect enabled (default " << hv_detect_clutter_ << ")" << std::endl << std::endl;

}

/**

* Parses Command Line Arguments (Argc,Argv)

* @param argc

* @param argv

*/

void

parseCommandLine (int argc,

char *argv[])

{

//Show help

if (pcl::console::find_switch (argc, argv, "-h"))

{

showHelp (argv[0]);

exit (0);

}

//Model & scene filenames

std::vector<int> filenames;

filenames = pcl::console::parse_file_extension_argument (argc, argv, ".pcd");

if (filenames.size () != 2)

{

std::cout << "Filenames missing.\n";

showHelp (argv[0]);

exit (-1);

}

model_filename_ = argv[filenames[0]];

scene_filename_ = argv[filenames[1]];

//Program behavior

if (pcl::console::find_switch (argc, argv, "-k"))

{

show_keypoints_ = true;

}

std::string used_algorithm;

if (pcl::console::parse_argument (argc, argv, "--algorithm", used_algorithm) != -1)

{

if (used_algorithm.compare ("Hough") == 0)

{

use_hough_ = true;

}

else if (used_algorithm.compare ("GC") == 0)

{

use_hough_ = false;

}

else

{

std::cout << "Wrong algorithm name.\n";

showHelp (argv[0]);

exit (-1);

}

}

//General parameters

pcl::console::parse_argument (argc, argv, "--model_ss", model_ss_);

pcl::console::parse_argument (argc, argv, "--scene_ss", scene_ss_);

pcl::console::parse_argument (argc, argv, "--rf_rad", rf_rad_);

pcl::console::parse_argument (argc, argv, "--descr_rad", descr_rad_);

pcl::console::parse_argument (argc, argv, "--cg_size", cg_size_);

pcl::console::parse_argument (argc, argv, "--cg_thresh", cg_thresh_);

pcl::console::parse_argument (argc, argv, "--icp_max_iter", icp_max_iter_);

pcl::console::parse_argument (argc, argv, "--icp_corr_distance", icp_corr_distance_);

pcl::console::parse_argument (argc, argv, "--hv_clutter_reg", hv_clutter_reg_);

pcl::console::parse_argument (argc, argv, "--hv_inlier_th", hv_inlier_th_);

pcl::console::parse_argument (argc, argv, "--hv_occlusion_th", hv_occlusion_th_);

pcl::console::parse_argument (argc, argv, "--hv_rad_clutter", hv_rad_clutter_);

pcl::console::parse_argument (argc, argv, "--hv_regularizer", hv_regularizer_);

pcl::console::parse_argument (argc, argv, "--hv_rad_normals", hv_rad_normals_);

pcl::console::parse_argument (argc, argv, "--hv_detect_clutter", hv_detect_clutter_);

}

int

main (int argc,

char *argv[])

{

parseCommandLine (argc, argv);

pcl::PointCloud<PointType>::Ptr model (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr model_keypoints (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr scene (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr scene_keypoints (new pcl::PointCloud<PointType> ());

pcl::PointCloud<NormalType>::Ptr model_normals (new pcl::PointCloud<NormalType> ());

pcl::PointCloud<NormalType>::Ptr scene_normals (new pcl::PointCloud<NormalType> ());

pcl::PointCloud<DescriptorType>::Ptr model_descriptors (new pcl::PointCloud<DescriptorType> ());

pcl::PointCloud<DescriptorType>::Ptr scene_descriptors (new pcl::PointCloud<DescriptorType> ());

/**

* Load Clouds

*/

if (pcl::io::loadPCDFile (model_filename_, *model) < 0)

{

std::cout << "Error loading model cloud." << std::endl;

showHelp (argv[0]);

return (-1);

}

if (pcl::io::loadPCDFile (scene_filename_, *scene) < 0)

{

std::cout << "Error loading scene cloud." << std::endl;

showHelp (argv[0]);

return (-1);

}

/**

* Compute Normals

*/

pcl::NormalEstimationOMP<PointType, NormalType> norm_est;

norm_est.setKSearch (10);

norm_est.setInputCloud (model);

norm_est.compute (*model_normals);

norm_est.setInputCloud (scene);

norm_est.compute (*scene_normals);

/**

* Downsample Clouds to Extract keypoints

*/

pcl::UniformSampling<PointType> uniform_sampling;

uniform_sampling.setInputCloud (model);

uniform_sampling.setRadiusSearch (model_ss_);

uniform_sampling.filter (*model_keypoints);

std::cout << "Model total points: " << model->size () << "; Selected Keypoints: " << model_keypoints->size () << std::endl;

uniform_sampling.setInputCloud (scene);

uniform_sampling.setRadiusSearch (scene_ss_);

uniform_sampling.filter (*scene_keypoints);

std::cout << "Scene total points: " << scene->size () << "; Selected Keypoints: " << scene_keypoints->size () << std::endl;

/**

* Compute Descriptor for keypoints

*/

pcl::SHOTEstimationOMP<PointType, NormalType, DescriptorType> descr_est;

descr_est.setRadiusSearch (descr_rad_);

descr_est.setInputCloud (model_keypoints);

descr_est.setInputNormals (model_normals);

descr_est.setSearchSurface (model);

descr_est.compute (*model_descriptors);

descr_est.setInputCloud (scene_keypoints);

descr_est.setInputNormals (scene_normals);

descr_est.setSearchSurface (scene);

descr_est.compute (*scene_descriptors);

/**

* Find Model-Scene Correspondences with KdTree

*/

pcl::CorrespondencesPtr model_scene_corrs (new pcl::Correspondences ());

pcl::KdTreeFLANN<DescriptorType> match_search;

match_search.setInputCloud (model_descriptors);

std::vector<int> model_good_keypoints_indices;

std::vector<int> scene_good_keypoints_indices;

for (std::size_t i = 0; i < scene_descriptors->size (); ++i)

{

std::vector<int> neigh_indices (1);

std::vector<float> neigh_sqr_dists (1);

if (!std::isfinite (scene_descriptors->at (i).descriptor[0])) //skipping NaNs

{

continue;

}

int found_neighs = match_search.nearestKSearch (scene_descriptors->at (i), 1, neigh_indices, neigh_sqr_dists);

if (found_neighs == 1 && neigh_sqr_dists[0] < 0.25f)

{

pcl::Correspondence corr (neigh_indices[0], static_cast<int> (i), neigh_sqr_dists[0]);

model_scene_corrs->push_back (corr);

model_good_keypoints_indices.push_back (corr.index_query);

scene_good_keypoints_indices.push_back (corr.index_match);

}

}

pcl::PointCloud<PointType>::Ptr model_good_kp (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr scene_good_kp (new pcl::PointCloud<PointType> ());

pcl::copyPointCloud (*model_keypoints, model_good_keypoints_indices, *model_good_kp);

pcl::copyPointCloud (*scene_keypoints, scene_good_keypoints_indices, *scene_good_kp);

std::cout << "Correspondences found: " << model_scene_corrs->size () << std::endl;

/**

* Clustering

*/

std::vector<Eigen::Matrix4f, Eigen::aligned_allocator<Eigen::Matrix4f> > rototranslations;

std::vector < pcl::Correspondences > clustered_corrs;

if (use_hough_)

{

pcl::PointCloud<RFType>::Ptr model_rf (new pcl::PointCloud<RFType> ());

pcl::PointCloud<RFType>::Ptr scene_rf (new pcl::PointCloud<RFType> ());

pcl::BOARDLocalReferenceFrameEstimation<PointType, NormalType, RFType> rf_est;

rf_est.setFindHoles (true);

rf_est.setRadiusSearch (rf_rad_);

rf_est.setInputCloud (model_keypoints);

rf_est.setInputNormals (model_normals);

rf_est.setSearchSurface (model);

rf_est.compute (*model_rf);

rf_est.setInputCloud (scene_keypoints);

rf_est.setInputNormals (scene_normals);

rf_est.setSearchSurface (scene);

rf_est.compute (*scene_rf);

// Clustering

pcl::Hough3DGrouping<PointType, PointType, RFType, RFType> clusterer;

clusterer.setHoughBinSize (cg_size_);

clusterer.setHoughThreshold (cg_thresh_);

clusterer.setUseInterpolation (true);

clusterer.setUseDistanceWeight (false);

clusterer.setInputCloud (model_keypoints);

clusterer.setInputRf (model_rf);

clusterer.setSceneCloud (scene_keypoints);

clusterer.setSceneRf (scene_rf);

clusterer.setModelSceneCorrespondences (model_scene_corrs);

clusterer.recognize (rototranslations, clustered_corrs);

}

else

{

pcl::GeometricConsistencyGrouping<PointType, PointType> gc_clusterer;

gc_clusterer.setGCSize (cg_size_);

gc_clusterer.setGCThreshold (cg_thresh_);

gc_clusterer.setInputCloud (model_keypoints);

gc_clusterer.setSceneCloud (scene_keypoints);

gc_clusterer.setModelSceneCorrespondences (model_scene_corrs);

gc_clusterer.recognize (rototranslations, clustered_corrs);

}

/**

* Stop if no instances

*/

if (rototranslations.size () <= 0)

{

std::cout << "*** No instances found! ***" << std::endl;

return (0);

}

else

{

std::cout << "Recognized Instances: " << rototranslations.size () << std::endl << std::endl;

}

/**

* Generates clouds for each instances found

*/

std::vector<pcl::PointCloud<PointType>::ConstPtr> instances;

for (std::size_t i = 0; i < rototranslations.size (); ++i)

{

pcl::PointCloud<PointType>::Ptr rotated_model (new pcl::PointCloud<PointType> ());

pcl::transformPointCloud (*model, *rotated_model, rototranslations[i]);

instances.push_back (rotated_model);

}

/**

* ICP

*/

std::vector<pcl::PointCloud<PointType>::ConstPtr> registered_instances;

if (true)

{

std::cout << "--- ICP ---------" << std::endl;

for (std::size_t i = 0; i < rototranslations.size (); ++i)

{

pcl::IterativeClosestPoint<PointType, PointType> icp;

icp.setMaximumIterations (icp_max_iter_);

icp.setMaxCorrespondenceDistance (icp_corr_distance_);

icp.setInputTarget (scene);

icp.setInputSource (instances[i]);

pcl::PointCloud<PointType>::Ptr registered (new pcl::PointCloud<PointType>);

icp.align (*registered);

registered_instances.push_back (registered);

std::cout << "Instance " << i << " ";

if (icp.hasConverged ())

{

std::cout << "Aligned!" << std::endl;

}

else

{

std::cout << "Not Aligned!" << std::endl;

}

}

std::cout << "-----------------" << std::endl << std::endl;

}

/**

* Hypothesis Verification

*/

std::cout << "--- Hypotheses Verification ---" << std::endl;

std::vector<bool> hypotheses_mask; // Mask Vector to identify positive hypotheses

pcl::GlobalHypothesesVerification<PointType, PointType> GoHv;

GoHv.setSceneCloud (scene); // Scene Cloud

GoHv.addModels (registered_instances, true); //Models to verify

GoHv.setResolution (hv_resolution_);

GoHv.setResolutionOccupancyGrid (hv_occupancy_grid_resolution_);

GoHv.setInlierThreshold (hv_inlier_th_);

GoHv.setOcclusionThreshold (hv_occlusion_th_);

GoHv.setRegularizer (hv_regularizer_);

GoHv.setRadiusClutter (hv_rad_clutter_);

GoHv.setClutterRegularizer (hv_clutter_reg_);

GoHv.setDetectClutter (hv_detect_clutter_);

GoHv.setRadiusNormals (hv_rad_normals_);

GoHv.verify ();

GoHv.getMask (hypotheses_mask); // i-element TRUE if hvModels[i] verifies hypotheses

for (std::size_t i = 0; i < hypotheses_mask.size (); i++)

{

if (hypotheses_mask[i])

{

std::cout << "Instance " << i << " is GOOD! <---" << std::endl;

}

else

{

std::cout << "Instance " << i << " is bad!" << std::endl;

}

}

std::cout << "-------------------------------" << std::endl;

/**

* Visualization

*/

pcl::visualization::PCLVisualizer viewer ("Hypotheses Verification");

viewer.addPointCloud (scene, "scene_cloud");

pcl::PointCloud<PointType>::Ptr off_scene_model (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr off_scene_model_keypoints (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr off_model_good_kp (new pcl::PointCloud<PointType> ());

pcl::transformPointCloud (*model, *off_scene_model, Eigen::Vector3f (-1, 0, 0), Eigen::Quaternionf (1, 0, 0, 0));

pcl::transformPointCloud (*model_keypoints, *off_scene_model_keypoints, Eigen::Vector3f (-1, 0, 0), Eigen::Quaternionf (1, 0, 0, 0));

pcl::transformPointCloud (*model_good_kp, *off_model_good_kp, Eigen::Vector3f (-1, 0, 0), Eigen::Quaternionf (1, 0, 0, 0));

if (show_keypoints_)

{

CloudStyle modelStyle = style_white;

pcl::visualization::PointCloudColorHandlerCustom<PointType> off_scene_model_color_handler (off_scene_model, modelStyle.r, modelStyle.g, modelStyle.b);

viewer.addPointCloud (off_scene_model, off_scene_model_color_handler, "off_scene_model");

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, modelStyle.size, "off_scene_model");

}

if (show_keypoints_)

{

CloudStyle goodKeypointStyle = style_violet;

pcl::visualization::PointCloudColorHandlerCustom<PointType> model_good_keypoints_color_handler (off_model_good_kp, goodKeypointStyle.r, goodKeypointStyle.g,

goodKeypointStyle.b);

viewer.addPointCloud (off_model_good_kp, model_good_keypoints_color_handler, "model_good_keypoints");

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, goodKeypointStyle.size, "model_good_keypoints");

pcl::visualization::PointCloudColorHandlerCustom<PointType> scene_good_keypoints_color_handler (scene_good_kp, goodKeypointStyle.r, goodKeypointStyle.g,

goodKeypointStyle.b);

viewer.addPointCloud (scene_good_kp, scene_good_keypoints_color_handler, "scene_good_keypoints");

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, goodKeypointStyle.size, "scene_good_keypoints");

}

for (std::size_t i = 0; i < instances.size (); ++i)

{

std::stringstream ss_instance;

ss_instance << "instance_" << i;

CloudStyle clusterStyle = style_red;

pcl::visualization::PointCloudColorHandlerCustom<PointType> instance_color_handler (instances[i], clusterStyle.r, clusterStyle.g, clusterStyle.b);

viewer.addPointCloud (instances[i], instance_color_handler, ss_instance.str ());

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, clusterStyle.size, ss_instance.str ());

CloudStyle registeredStyles = hypotheses_mask[i] ? style_green : style_cyan;

ss_instance << "_registered"; // no std::endl here: it would embed a newline in the cloud id

pcl::visualization::PointCloudColorHandlerCustom<PointType> registered_instance_color_handler (registered_instances[i], registeredStyles.r,

registeredStyles.g, registeredStyles.b);

viewer.addPointCloud (registered_instances[i], registered_instance_color_handler, ss_instance.str ());

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, registeredStyles.size, ss_instance.str ());

}

while (!viewer.wasStopped ())

{

viewer.spinOnce ();

}

return (0);

}


Walkthrough

Take a look at the various parts of the code to see how it works.

Input Parameters

/**
 * Prints out Help message
 * @param filename Runnable App Name
 */
void
showHelp (char *filename);

/**
 * Parses Command Line Arguments (Argc,Argv)
 * @param argc
 * @param argv
 */
void
parseCommandLine (int argc,
                  char *argv[]);

The showHelp function prints the input parameters accepted by the program, and parseCommandLine binds the user input to the program parameters.

The only two mandatory parameters are model_filename and scene_filename (all other parameters are initialized with a default value).
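The way an optional flag overrides a default can be pictured with the plain C++ sketch below. It is not PCL's pcl::console::parse_argument: parseFloatOption is a hypothetical stand-in that only handles the "--name value" form, and it leaves the variable untouched when the flag is absent so that the default survives.

```cpp
#include <cstdlib>
#include <cstring>

// Hypothetical helper (not part of PCL): looks for "--name value" in argv.
// Returns the index of the flag, or -1 if the flag is absent, in which case
// 'value' keeps whatever default it already held.
int
parseFloatOption (int argc, const char *const argv[], const char *name, float &value)
{
  for (int i = 1; i + 1 < argc; ++i)
  {
    if (std::strcmp (argv[i], name) == 0)
    {
      value = std::strtof (argv[i + 1], nullptr);
      return i;
    }
  }
  return -1;
}
```

With this shape, a variable initialized to 0.005f stays at 0.005f unless the user passes the matching flag, which is the behavior the tutorial relies on for every optional parameter.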

Other useful options are:

--algorithm (Hough|GC): switches the clustering algorithm. See 3D Object Recognition based on Correspondence Grouping.

-k: shows the keypoints used to compute the correspondences.

Hypotheses Verification parameters are:

--hv_clutter_reg val: Clutter Regularizer (default 5.0)

--hv_inlier_th val: Inlier threshold (default 0.005)

--hv_occlusion_th val: Occlusion threshold (default 0.01)

--hv_rad_clutter val: Clutter radius (default 0.03)

--hv_regularizer val: Regularizer value (default 3.0)

--hv_rad_normals val: Normals radius (default 0.05)

--hv_detect_clutter val: TRUE if clutter detect enabled (default true)

More details on the Global Hypothesis Verification parameters can be found here: A. Aldoma, F. Tombari, L. Di Stefano, M. Vincze, A global hypothesis verification method for 3D object recognition, ECCV 2012.

Helpers

struct CloudStyle

{

double r;

double g;

double b;

double size;

CloudStyle (double r,

double g,

double b,

double size) :

r (r),

g (g),

b (b),

size (size)

{

}

};

CloudStyle style_white (255.0, 255.0, 255.0, 4.0);

CloudStyle style_red (255.0, 0.0, 0.0, 3.0);

CloudStyle style_green (0.0, 255.0, 0.0, 5.0);

CloudStyle style_cyan (93.0, 200.0, 217.0, 4.0);

CloudStyle style_violet (255.0, 0.0, 255.0, 8.0);

This simple struct is used to create color presets (RGB values plus a point size) for the clouds being visualized.

Clustering

The code below implements a full Clustering Pipeline: the input of the pipeline is a pair of point clouds (the model and the scene), and the output is

std::vector<Eigen::Matrix4f, Eigen::aligned_allocator<Eigen::Matrix4f> > rototranslations;

rototranslations holds one coarse 4x4 transformation per object hypothesis; applying it to the model gives the coarsely transformed model (“object hypothesis”) in the scene.
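To make the role of such a matrix concrete, here is a minimal sketch of what a rototranslation does to a single point. It uses plain arrays rather than Eigen or pcl::transformPointCloud, and the names Transform, Point and applyRigidTransform are assumptions made for this illustration only.

```cpp
#include <array>

// Row-major 4x4 homogeneous transform: the upper-left 3x3 block is the
// rotation R, the last column holds the translation t, and the last row
// is (0, 0, 0, 1).
using Transform = std::array<std::array<float, 4>, 4>;
using Point = std::array<float, 3>;

// Hypothetical helper: computes p' = R * p + t for one point.
Point
applyRigidTransform (const Transform &T, const Point &p)
{
  Point out{};
  for (int r = 0; r < 3; ++r)
  {
    out[r] = T[r][0] * p[0] + T[r][1] * p[1] + T[r][2] * p[2] + T[r][3];
  }
  return out;
}
```

Applying the same operation to every model point yields one coarsely placed copy of the model per entry of the list.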

Take a look at the full pipeline:

}

/**

* Compute Normals

*/

pcl::NormalEstimationOMP<PointType, NormalType> norm_est;

norm_est.setKSearch (10);

norm_est.setInputCloud (model);

norm_est.compute (*model_normals);

norm_est.setInputCloud (scene);

norm_est.compute (*scene_normals);

/**

* Downsample Clouds to Extract keypoints

*/

pcl::UniformSampling<PointType> uniform_sampling;

uniform_sampling.setInputCloud (model);

uniform_sampling.setRadiusSearch (model_ss_);

uniform_sampling.filter (*model_keypoints);

std::cout << "Model total points: " << model->size () << "; Selected Keypoints: " << model_keypoints->size () << std::endl;

uniform_sampling.setInputCloud (scene);

uniform_sampling.setRadiusSearch (scene_ss_);

uniform_sampling.filter (*scene_keypoints);

std::cout << "Scene total points: " << scene->size () << "; Selected Keypoints: " << scene_keypoints->size () << std::endl;

/**

* Compute Descriptor for keypoints

*/

pcl::SHOTEstimationOMP<PointType, NormalType, DescriptorType> descr_est;

descr_est.setRadiusSearch (descr_rad_);

descr_est.setInputCloud (model_keypoints);

descr_est.setInputNormals (model_normals);

descr_est.setSearchSurface (model);

descr_est.compute (*model_descriptors);

descr_est.setInputCloud (scene_keypoints);

descr_est.setInputNormals (scene_normals);

descr_est.setSearchSurface (scene);

descr_est.compute (*scene_descriptors);

/**

* Find Model-Scene Correspondences with KdTree

*/

pcl::CorrespondencesPtr model_scene_corrs (new pcl::Correspondences ());

pcl::KdTreeFLANN<DescriptorType> match_search;

match_search.setInputCloud (model_descriptors);

std::vector<int> model_good_keypoints_indices;

std::vector<int> scene_good_keypoints_indices;

for (std::size_t i = 0; i < scene_descriptors->size (); ++i)

{

std::vector<int> neigh_indices (1);

std::vector<float> neigh_sqr_dists (1);

if (!std::isfinite (scene_descriptors->at (i).descriptor[0])) //skipping NaNs

{

continue;

}

int found_neighs = match_search.nearestKSearch (scene_descriptors->at (i), 1, neigh_indices, neigh_sqr_dists);

if (found_neighs == 1 && neigh_sqr_dists[0] < 0.25f)

{

pcl::Correspondence corr (neigh_indices[0], static_cast<int> (i), neigh_sqr_dists[0]);

model_scene_corrs->push_back (corr);

model_good_keypoints_indices.push_back (corr.index_query);

scene_good_keypoints_indices.push_back (corr.index_match);

}

}

pcl::PointCloud<PointType>::Ptr model_good_kp (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr scene_good_kp (new pcl::PointCloud<PointType> ());

pcl::copyPointCloud (*model_keypoints, model_good_keypoints_indices, *model_good_kp);

pcl::copyPointCloud (*scene_keypoints, scene_good_keypoints_indices, *scene_good_kp);

std::cout << "Correspondences found: " << model_scene_corrs->size () << std::endl;

/**

* Clustering

*/

std::vector<Eigen::Matrix4f, Eigen::aligned_allocator<Eigen::Matrix4f> > rototranslations;

std::vector < pcl::Correspondences > clustered_corrs;

if (use_hough_)

{

pcl::PointCloud<RFType>::Ptr model_rf (new pcl::PointCloud<RFType> ());

pcl::PointCloud<RFType>::Ptr scene_rf (new pcl::PointCloud<RFType> ());

pcl::BOARDLocalReferenceFrameEstimation<PointType, NormalType, RFType> rf_est;

rf_est.setFindHoles (true);

rf_est.setRadiusSearch (rf_rad_);

rf_est.setInputCloud (model_keypoints);

rf_est.setInputNormals (model_normals);

rf_est.setSearchSurface (model);

rf_est.compute (*model_rf);

rf_est.setInputCloud (scene_keypoints);

rf_est.setInputNormals (scene_normals);

rf_est.setSearchSurface (scene);

rf_est.compute (*scene_rf);

// Clustering

pcl::Hough3DGrouping<PointType, PointType, RFType, RFType> clusterer;

clusterer.setHoughBinSize (cg_size_);

clusterer.setHoughThreshold (cg_thresh_);

clusterer.setUseInterpolation (true);

clusterer.setUseDistanceWeight (false);

clusterer.setInputCloud (model_keypoints);

clusterer.setInputRf (model_rf);

clusterer.setSceneCloud (scene_keypoints);

clusterer.setSceneRf (scene_rf);

clusterer.setModelSceneCorrespondences (model_scene_corrs);

clusterer.recognize (rototranslations, clustered_corrs);

}

else

{

pcl::GeometricConsistencyGrouping<PointType, PointType> gc_clusterer;

gc_clusterer.setGCSize (cg_size_);

gc_clusterer.setGCThreshold (cg_thresh_);

gc_clusterer.setInputCloud (model_keypoints);

gc_clusterer.setSceneCloud (scene_keypoints);

gc_clusterer.setModelSceneCorrespondences (model_scene_corrs);

gc_clusterer.recognize (rototranslations, clustered_corrs);

}
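The correspondence search in the listing can be illustrated without PCL. The sketch below is a brute-force stand-in for the KdTree lookup, under the assumption that descriptors are plain float vectors: for each scene descriptor it finds the closest model descriptor and keeps the pair only if the squared distance is below a threshold (0.25, as in the listing). Match, Descriptor and matchDescriptors are hypothetical names.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Match
{
  int model_index;     // index of the matched model descriptor
  int scene_index;     // index of the scene descriptor that was queried
  float sqr_distance;  // squared Euclidean distance between the two
};

using Descriptor = std::vector<float>;

// Brute-force nearest-neighbour matching (a KdTree would return the same
// pairs, only faster). Scene descriptors whose first entry is not finite
// are skipped, mirroring the NaN check in the listing.
std::vector<Match>
matchDescriptors (const std::vector<Descriptor> &model,
                  const std::vector<Descriptor> &scene,
                  float max_sqr_dist = 0.25f)
{
  std::vector<Match> matches;
  for (std::size_t s = 0; s < scene.size (); ++s)
  {
    if (scene[s].empty () || !std::isfinite (scene[s][0]))
    {
      continue;
    }
    int best = -1;
    float best_dist = std::numeric_limits<float>::max ();
    for (std::size_t m = 0; m < model.size (); ++m)
    {
      if (model[m].size () != scene[s].size ())
      {
        continue;  // ignore descriptors of a different length
      }
      float d = 0.0f;
      for (std::size_t k = 0; k < scene[s].size (); ++k)
      {
        const float diff = model[m][k] - scene[s][k];
        d += diff * diff;
      }
      if (d < best_dist)
      {
        best_dist = d;
        best = static_cast<int> (m);
      }
    }
    if (best >= 0 && best_dist < max_sqr_dist)
    {
      matches.push_back ({best, static_cast<int> (s), best_dist});
    }
  }
  return matches;
}
```

The threshold trades recall for precision: a larger value keeps more, but noisier, correspondences for the clustering stage to sort out.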

For a full explanation of the clustering pipeline, see 3D Object Recognition based on Correspondence Grouping.

Model-in-Scene Projection

To refine the coarse transformation associated with each object hypothesis, we apply a few ICP iterations.

We create an instances list to store the coarsely transformed models:

std::vector<pcl::PointCloud<PointType>::ConstPtr> instances;

for (std::size_t i = 0; i < rototranslations.size (); ++i)

{

pcl::PointCloud<PointType>::Ptr rotated_model (new pcl::PointCloud<PointType> ());

pcl::transformPointCloud (*model, *rotated_model, rototranslations[i]);

instances.push_back (rotated_model);

}

Then we run ICP on the instances with respect to the scene to obtain the registered_instances:

std::vector<pcl::PointCloud<PointType>::ConstPtr> registered_instances;

if (true)

{

std::cout << "--- ICP ---------" << std::endl;

for (std::size_t i = 0; i < rototranslations.size (); ++i)

{

pcl::IterativeClosestPoint<PointType, PointType> icp;

icp.setMaximumIterations (icp_max_iter_);

icp.setMaxCorrespondenceDistance (icp_corr_distance_);

icp.setInputTarget (scene);

icp.setInputSource (instances[i]);

pcl::PointCloud<PointType>::Ptr registered (new pcl::PointCloud<PointType>);

icp.align (*registered);

registered_instances.push_back (registered);

std::cout << "Instance " << i << " ";

if (icp.hasConverged ())

{

std::cout << "Aligned!" << std::endl;

}

else

{

std::cout << "Not Aligned!" << std::endl;

}

}

std::cout << "-----------------" << std::endl << std::endl;

}

Hypotheses Verification

std::cout << "--- Hypotheses Verification ---" << std::endl;

std::vector<bool> hypotheses_mask; // Mask Vector to identify positive hypotheses

pcl::GlobalHypothesesVerification<PointType, PointType> GoHv;

GoHv.setSceneCloud (scene); // Scene Cloud

GoHv.addModels (registered_instances, true); //Models to verify

GoHv.setResolution (hv_resolution_);

GoHv.setResolutionOccupancyGrid (hv_occupancy_grid_resolution_);

GoHv.setInlierThreshold (hv_inlier_th_);

GoHv.setOcclusionThreshold (hv_occlusion_th_);

GoHv.setRegularizer (hv_regularizer_);

GoHv.setRadiusClutter (hv_rad_clutter_);

GoHv.setClutterRegularizer (hv_clutter_reg_);

GoHv.setDetectClutter (hv_detect_clutter_);

GoHv.setRadiusNormals (hv_rad_normals_);

GoHv.verify ();

GoHv.getMask (hypotheses_mask); // hypotheses_mask[i] is true if registered_instances[i] passed verification

for (std::size_t i = 0; i < hypotheses_mask.size (); i++)

{

if (hypotheses_mask[i])

{

std::cout << "Instance " << i << " is GOOD! <---" << std::endl;

}

else

{

std::cout << "Instance " << i << " is bad!" << std::endl;

}

}

std::cout << "-------------------------------" << std::endl;

GlobalHypothesesVerification takes as input a list of registered_instances and a scene, so we can verify() them to get a hypotheses_mask: a bool vector where hypotheses_mask[i] is TRUE if registered_instances[i] is a verified hypothesis, and FALSE if it has been classified as a false positive (and must therefore be rejected).
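If you only need the accepted hypotheses afterwards, the mask can be used to filter the list. The following is a minimal sketch using only the standard library; keep_verified is a hypothetical helper, not part of PCL.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Return the items whose mask entry is true, preserving their order.
// Hypothetical helper for illustration; both vectors must have the same size.
template <typename T>
std::vector<T> keep_verified (const std::vector<T> &items, const std::vector<bool> &mask)
{
  assert (items.size () == mask.size ());
  std::vector<T> kept;
  for (std::size_t i = 0; i < items.size (); ++i)
  {
    if (mask[i])
      kept.push_back (items[i]);
  }
  return kept;
}
```

With the tutorial's types, T would be pcl::PointCloud<PointType>::ConstPtr and the call would look like keep_verified (registered_instances, hypotheses_mask).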

Visualization

The first part of the Visualization code section is pretty simple: with the -k option the program displays the good keypoints in the model and in the scene with a style_violet color.

Later we iterate over instances, and each instances[i] is displayed in the viewer with a style_red color.

Each registered_instances[i] is displayed in one of two colors: style_green if the current instance is verified (hypotheses_mask[i] is TRUE), style_cyan otherwise.

/**

* Visualization

*/

pcl::visualization::PCLVisualizer viewer ("Hypotheses Verification");

viewer.addPointCloud (scene, "scene_cloud");

pcl::PointCloud<PointType>::Ptr off_scene_model (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr off_scene_model_keypoints (new pcl::PointCloud<PointType> ());

pcl::PointCloud<PointType>::Ptr off_model_good_kp (new pcl::PointCloud<PointType> ());

pcl::transformPointCloud (*model, *off_scene_model, Eigen::Vector3f (-1, 0, 0), Eigen::Quaternionf (1, 0, 0, 0));

pcl::transformPointCloud (*model_keypoints, *off_scene_model_keypoints, Eigen::Vector3f (-1, 0, 0), Eigen::Quaternionf (1, 0, 0, 0));

pcl::transformPointCloud (*model_good_kp, *off_model_good_kp, Eigen::Vector3f (-1, 0, 0), Eigen::Quaternionf (1, 0, 0, 0));

if (show_keypoints_)

{

CloudStyle modelStyle = style_white;

pcl::visualization::PointCloudColorHandlerCustom<PointType> off_scene_model_color_handler (off_scene_model, modelStyle.r, modelStyle.g, modelStyle.b);

viewer.addPointCloud (off_scene_model, off_scene_model_color_handler, "off_scene_model");

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, modelStyle.size, "off_scene_model");

}

if (show_keypoints_)

{

CloudStyle goodKeypointStyle = style_violet;

pcl::visualization::PointCloudColorHandlerCustom<PointType> model_good_keypoints_color_handler (off_model_good_kp, goodKeypointStyle.r, goodKeypointStyle.g,

goodKeypointStyle.b);

viewer.addPointCloud (off_model_good_kp, model_good_keypoints_color_handler, "model_good_keypoints");

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, goodKeypointStyle.size, "model_good_keypoints");

pcl::visualization::PointCloudColorHandlerCustom<PointType> scene_good_keypoints_color_handler (scene_good_kp, goodKeypointStyle.r, goodKeypointStyle.g,

goodKeypointStyle.b);

viewer.addPointCloud (scene_good_kp, scene_good_keypoints_color_handler, "scene_good_keypoints");

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, goodKeypointStyle.size, "scene_good_keypoints");

}

for (std::size_t i = 0; i < instances.size (); ++i)

{

std::stringstream ss_instance;

ss_instance << "instance_" << i;

CloudStyle clusterStyle = style_red;

pcl::visualization::PointCloudColorHandlerCustom<PointType> instance_color_handler (instances[i], clusterStyle.r, clusterStyle.g, clusterStyle.b);

viewer.addPointCloud (instances[i], instance_color_handler, ss_instance.str ());

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, clusterStyle.size, ss_instance.str ());

CloudStyle registeredStyles = hypotheses_mask[i] ? style_green : style_cyan;

ss_instance << "_registered"; // no std::endl here: a newline does not belong in a cloud id

pcl::visualization::PointCloudColorHandlerCustom<PointType> registered_instance_color_handler (registered_instances[i], registeredStyles.r,

registeredStyles.g, registeredStyles.b);

viewer.addPointCloud (registered_instances[i], registered_instance_color_handler, ss_instance.str ());

viewer.setPointCloudRenderingProperties (pcl::visualization::PCL_VISUALIZER_POINT_SIZE, registeredStyles.size, ss_instance.str ());

}

while (!viewer.wasStopped ())

{

viewer.spinOnce ();

}

Compiling and running the program

Create a CMakeLists.txt file and add the following lines into it:

cmake_minimum_required(VERSION 2.6 FATAL_ERROR)

project(global_hypothesis_verification)

#Pcl

find_package(PCL 1.7 REQUIRED)

include_directories(${PCL_INCLUDE_DIRS})

link_directories(${PCL_LIBRARY_DIRS})

add_definitions(${PCL_DEFINITIONS})

add_executable (global_hypothesis_verification global_hypothesis_verification.cpp)

target_link_libraries (global_hypothesis_verification ${PCL_LIBRARIES})


After you have built the executable, you can launch it as in this example:

>>> ./global_hypothesis_verification milk.pcd milk_cartoon_all_small_clorox.pcd

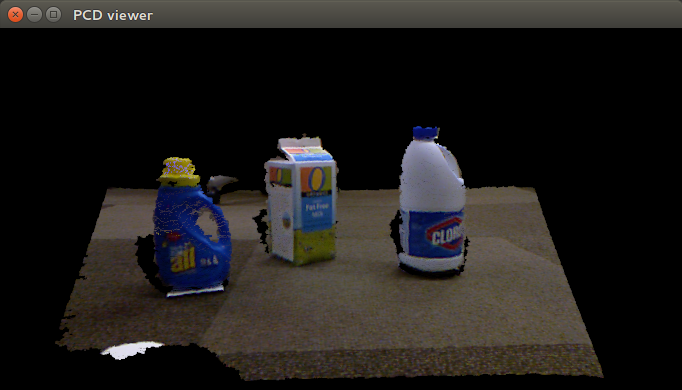

Original Scene Image

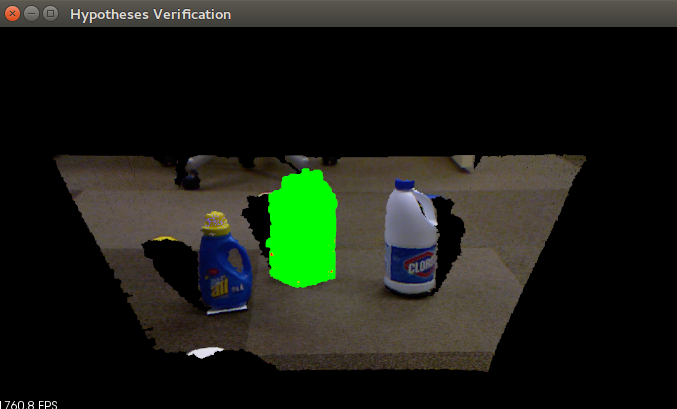

Valid Hypothesis (Green) with simple parameters

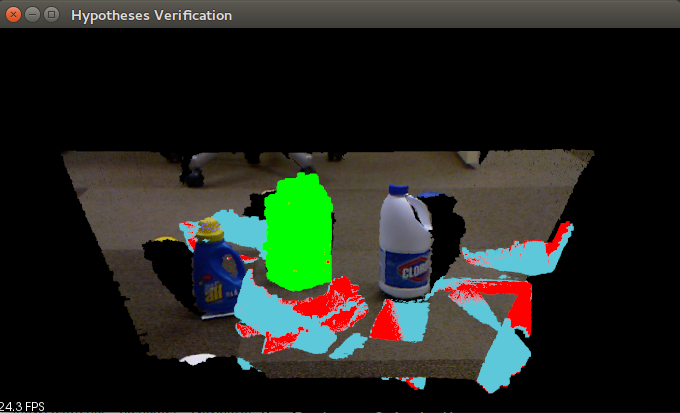

You can simulate more false positives by using a larger bin size parameter for the Hough Voting Correspondence Grouping algorithm:

>>> ./global_hypothesis_verification milk.pcd milk_cartoon_all_small_clorox.pcd --cg_size 0.035

Valid Hypothesis (Green) among 9 false positives